From Splunk Wiki

You've been there before, having spent hours getting your Splunk set up just right. Then you hear in #splunk an idea that would have made your job much easier, had you only known it then. All of these tidbits of knowledge could make a pretty nice .conf presentation one day, so share them here.

Note: The contents of this page have been contributed by Splunk Luminaries and Customers Who Inhabit the #splunk IRC Channel on EFNET. Feel free to join us there for further discussion. :)

Testing and troubleshooting

- Configure the Monitoring Console (the artist formerly known as 'DMC', now just 'MC'): https://docs.splunk.com/Documentation/Splunk/7.1.0/DMC/DMCoverview

- Use btool to troubleshoot config - Splunk has a large configuration surface, with many opportunities to set the same configuration in different locations. The Splunk command-line utility 'btool' is incredibly helpful here: it gives you a merged view of all the configuration files. See http://docs.splunk.com/Documentation/Splunk/latest/Troubleshooting/Usebtooltotroubleshootconfigurations .

- Make an index for test data that you can delete anytime you need to. Any new datatypes you haven't imported previously should be 'smoke-tested' here. Date extractions and event breaking are important to get right and a new data type that isn't handled properly can cause big issues if not caught till later.

- Set up more than one index. Think: Will one set of users need access but not another? Will I want to retain some data longer or shorter than other data? Could a runaway process rapidly exceed my tolerance for storage?

- Make a test environment. Never test index-time changes in the production environment. A VM is fine. DO IT. NOW!
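To make the "merged view" in the btool tip concrete, here is a minimal Python sketch of layered settings being flattened while remembering which layer supplied each winning value. That is roughly the kind of answer you get from running btool with its --debug option. This is not btool itself: the layer labels and settings are invented for illustration, and Splunk's real precedence rules are more involved than a simple ordered list.

```python
from typing import Dict, List, Tuple

# stanza name -> {setting: value}
Stanzas = Dict[str, Dict[str, str]]


def merge_layers(layers: List[Tuple[str, Stanzas]]) -> Dict[str, Dict[str, Tuple[str, str]]]:
    """Merge config layers, given lowest precedence first.

    Returns stanza -> setting -> (winning value, label of the layer it came from).
    Later layers overwrite earlier ones one setting at a time, so a stanza
    can end up with values drawn from several layers.
    """
    merged: Dict[str, Dict[str, Tuple[str, str]]] = {}
    for label, stanzas in layers:
        for stanza, settings in stanzas.items():
            target = merged.setdefault(stanza, {})
            for key, value in settings.items():
                target[key] = (value, label)
    return merged


# Hypothetical layers: a shipped default and an app-level override.
layers = [
    ("system/default", {"mytype": {"SHOULD_LINEMERGE": "true", "TRUNCATE": "10000"}}),
    ("apps/myapp/local", {"mytype": {"SHOULD_LINEMERGE": "false"}}),
]

for stanza, settings in merge_layers(layers).items():
    for key, (value, origin) in sorted(settings.items()):
        print(f"{origin:20} [{stanza}] {key} = {value}")
```

The point of the sketch is the per-setting granularity: the override replaces only SHOULD_LINEMERGE, while TRUNCATE still comes from the lower layer, which is exactly the situation that is hard to spot by reading individual files.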

Data input and indexing

- In line with the above, for each input *ALWAYS* declare sourcetype and index. Splunk may be very good at detecting timestamps of various formats, but don't leave your sourcetype naming to chance.

- For monitored directories of mixed sourcetypes, still declare the sourcetypes explicitly, just by file pattern in props.conf

- For any applications that write their logs with a datestamp (IIS, Tomcat's localhost_access_log, etc), create a transforms entry to strip the datestamp from the source name. This makes life easier for the user, who no longer needs to wildcard source names, and it avoids creating endless numbers of unique sources. Splunk likes to search source=/my/path/to/localhost_access_log far more than source=/my/path/to/localhost_access* . However, don't go overboard in folding away information for source. If you have to troubleshoot the data path, you'll want to have some idea which actual files the data comes from. Consider copying the original source to an indexed field.

- Syslog - If you plan to receive syslog messages via tcp or udp, resist the urge to have Splunk listen for it. You'll invariably need to restart Splunk for various config changes you make, while a separate rsyslog or syslog-ng daemon will simply hum along, continuing to receive data while you're applying Splunk changes.

- Scripted inputs - If you have a scripted input that grabs a lot of data (think DBX, or OPSEC LEA), be careful about where you add it. If you have that input running on an indexer, all of the events it generates will reside on only that indexer. This means you won't be taking advantage of distributed Splunk searching.

- Events are parsed on the first instance that can parse them, usually the indexers or the heavy forwarders, so put your props/transforms for index-time parsing there. Exception: since Splunk 6.x, structured data (csv, iis, json) is parsed on the forwarder (see INDEXED_EXTRACTIONS).

- If you are using a volume configuration for hot and cold, make sure that those volumes point to locations outside of $SPLUNK_DB or you will most likely end up with warnings about overlapping configurations.

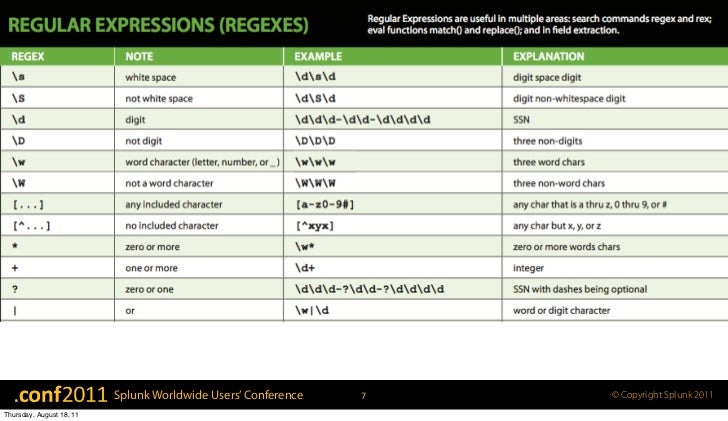

- When you are onboarding new data, make sure that you (or that the TA you are using) is configured to properly extract time stamps (TIME_PREFIX, MAX_TIMESTAMP_LOOKAHEAD, TIME_FORMAT), and that you are using a LINE_BREAKER and disabling line merging (SHOULD_LINEMERGE = false). These settings have significant performance implications (see https://conf.splunk.com/files/2016/slides/observations-and-recommendations-on-splunk-performance.pdf and https://conf.splunk.com/files/2016/slides/worst-practices-and-how-to-fix-them.pdf). Here is a cheat sheet to help you remember.
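Returning to the datestamp tip earlier in this section: before committing a pattern to a transforms entry, it can help to prototype it against real file names. The Python sketch below is one way to do that. The naming scheme it assumes (base name, a separator, YYYY-MM-DD, an optional extension) and the function name are assumptions for illustration, and Python's regex flavour is close to, but not identical to, the one Splunk uses, so retest the final pattern in your test index.

```python
import re
from typing import Tuple

# Assumed naming scheme: <base><sep>YYYY-MM-DD[.ext], as Tomcat access logs use.
# Other applications (IIS, for example) stamp their files differently and
# would need their own pattern.
_DATESTAMPED = re.compile(r"^(?P<base>.*?)[._-]\d{4}-\d{2}-\d{2}(?:\.\w+)?$")


def normalize_source(source: str) -> Tuple[str, str]:
    """Return (normalized_source, original_source).

    The original is returned alongside the normalized name so it can be
    kept somewhere (the tip above suggests an indexed field) for
    troubleshooting the data path.
    """
    match = _DATESTAMPED.match(source)
    normalized = match.group("base") if match else source
    return normalized, source


for path in (
    "/my/path/to/localhost_access_log.2014-01-21.txt",
    "/var/log/messages",
):
    print(normalize_source(path))
```

Sources that do not match the pattern pass through unchanged, which is the safer default when a directory holds a mix of file naming styles.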

Architecture/ Deployment

- Dedicated Deployment Server and License Master - If your install grows beyond a single Splunk instance (talking indexers & search heads here, not forwarders), set up a separate server to be a license manager & deployment server. A VM is fine for these purposes, and being on a VM can actually be a benefit. You can even use the tarball install to put these multiple instances on the same box/VM: say, one in /opt/splunk, one in /opt/deploymentserver, and one in /opt/licenseserver. Run them on different ports, of course.

- Indexers WILL need more than 1024 file descriptors, which is the default setting on most Linux distros. Remember, each connected forwarder needs one descriptor, and each index will need as many as 8 or more. You can quickly run out, so go ahead and set the limit higher: 10240 minimum, and perhaps as many as 65536. You can use the SOS (Splunk On Splunk) 'Splunk File Descriptor Usage' view to track file descriptor usage over time and prevent an outage caused by running out.

- When upgrading your deployment servers to 6.0, be aware that the serverclass.conf setting machineTypes was deprecated in 5.0, and is removed in 6.0. You should now be using machineTypesFilter.

- If running Splunk indexers or search heads on RHEL6 or its derivatives CentOS6 / OEL6 - turn off Transparent Huge Pages (THP). This has a profound impact on system load. There's a decent Oracle Blog about it at https://blogs.oracle.com/linux/entry/performance_issues_with_transparent_huge. (Thanks Baconesq!) Update 2014-01-21 -- Splunk 5.0.7 includes a check for THPs and should warn you about them (Splunk BugID SPL-75912)

- In Oracle Linux version 6.5 Transparent HugePages have been removed.

- Use DNS CNAMEs for things like your deployment server and your license master. That way, if you need to move it later it's not entirely painful.

- Use apps for configuration, especially of forwarders. Even if you aren't starting out with deployment server, putting all of your configuration in /opt/splunkforwarder/etc/system/local will make it much harder when you do start to use it. Put that config in /opt/splunkforwarder/etc/apps/myinputs instead, and then you're in a better position later if you do decide to push that app.

- Along with the above, put your deploymentclient.conf information into its own app. When installing a forwarder, drop your 'deploymentclient' app into $SPLUNK_HOME/etc/apps to bootstrap your deployment server connection. Then, push a new copy of that app out via deployment server. Now, if you need to split your deployment server clients in half you can do it using deployment server.

- For forwarders, put outputs.conf and any corresponding certs in an app. This makes life easier when the time comes to renew the certs, or when you add extra indexers. In fact, when installing a forwarder, the only config it needs is the instruction on where to find the rest of the configs. See above.

- Remember that REST API that you had all the ideas for hooking up to other systems? Why not use it for troubleshooting? Create a troubleshooter user on your forwarders, and you can grab information about them. If you want to 'outsource' support of forwarders to other personnel within your organization, they can use this method to start diagnosing issues. Here's an example of what the TailingProcessor sees for files:

curl https://myhost:8089/services/admin/inputstatus/TailingProcessor:FileStatus -k -u 'troubleshooter:mypassword'

- When installing lots of Splunk instances, you can use user-seed.conf to set the default password for the admin account to whatever you need it to be. The file needs to exist prior to starting Splunk for the first time, and will be deleted by Splunk after it is used. In fact, it would be easy enough to also have user-seed.conf include your troubleshooter user from up above as well.

- Filesystem / Disk layouts - Use Logical Volume Manager (LVM) on Linux. At a minimum, make two LVs: one for /opt/splunk and one for /opt/splunk/var/lib/splunk. With this approach, you can use LVM snapshots for backups while Splunk remains running. Also, snapshots before software upgrades can make rollbacks (if necessary) easier.
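The file descriptor tip above boils down to simple arithmetic: one descriptor per connected forwarder plus roughly 8 per index. A rough Python sketch of that estimate follows. The function names and the example counts are made up for illustration, the figure ignores everything else the process has open, and "8 or more" is a floor rather than an exact number, so treat the result as a lower bound.

```python
def estimate_descriptors(forwarders: int, indexes: int, per_index: int = 8) -> int:
    """Lower-bound estimate: one descriptor per connected forwarder,
    plus `per_index` descriptors for each index (8 per the tip above)."""
    return forwarders + indexes * per_index


def has_headroom(estimate: int, limit: int) -> bool:
    """True if the estimated need is still below the configured limit."""
    return estimate < limit


# Hypothetical deployment: 800 forwarders sending to an indexer with 40 indexes.
need = estimate_descriptors(forwarders=800, indexes=40)
for limit in (1024, 10240, 65536):
    verdict = "ok" if has_headroom(need, limit) else "too low"
    print(f"need >= {need}, limit {limit}: {verdict}")
```

Even this modest hypothetical deployment exceeds the common 1024 default, which is why the tip suggests raising the limit up front instead of waiting for an outage.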

Searches / UI

- Subsearches and lookup tables are great! Be wary of the result limits of both, however, especially when dashboarding.

- Find a cool visual (chart/dashboard/form) from one of the apps and want to learn how to do them? Poke around the XML to see how the data is parsed/filtered/transformed. Gives you great ideas to customize for your own purposes.

- When developing searches and dashboards, if you change around your XML or certain .conf files directly, you don't always have to restart Splunk for it to take effect. Often enough you can just hit the /debug/refresh URL and that'll reload any changed XML and some .conf files.

- If you have a database background, ensure that you read up on how stats works. Really. Otherwise, your searches will contain more joins than are needed. Also, read Splunk for SQL users.

- Splunk's DB Connect (aka DBX) App (http://apps.splunk.com/app/958/) is wonderful for interacting with databases. You'll have much better (faster) luck running scheduled DB queries and writing the results to a lookup table using '| outputlookup' that Splunk references rather than doing 'live' lookups from all your search heads to your databases. Splunk handles large lookup tables elegantly, don't worry about it. (h/t to Baconesq and Duckfez)

- Be as specific as possible when creating searches. Specify indexes, sourcetypes, etc., if you know them.

- Since it is so easy to build and extend a dashboard in Splunk, it is very tempting to put LOTS of searches/panels on a single dashboard. But note that most users won't scroll down to see the cool stuff at the bottom, so you are wasting server capacity running searches whose results will likely never be seen. Consider breaking the dashboard into smaller ones that each focus on a different part, use, or layer of the data, and linking them together with creative use of click-throughs or simple HTML links.
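On the stats-versus-join tip above: for readers coming from SQL, the idea is that you often don't need to join two result sets at all. You can search both kinds of events at once and group them by the shared key in a single pass. The Python sketch below mimics that grouping on plain dictionaries. It is only an analogy for what a stats ... by <key> style aggregation does, and the field names and events are invented.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def stats_values_by(events: Iterable[dict], key: str) -> Dict[str, Dict[str, List[str]]]:
    """Group events by `key` and collect the distinct values of every other
    field, in sorted order. Events that lack the key are skipped."""
    grouped = defaultdict(lambda: defaultdict(set))
    for event in events:
        if key not in event:
            continue
        for field, value in event.items():
            if field != key:
                grouped[event[key]][field].add(value)
    return {
        group: {field: sorted(values) for field, values in fields.items()}
        for group, fields in grouped.items()
    }


# Two hypothetical event types that share a session id. A SQL habit would
# be to join logins to errors; one grouping pass over both gives the same
# combined row per session.
events = [
    {"session": "s1", "user": "alice"},   # login event
    {"session": "s1", "status": "500"},   # error event
    {"session": "s2", "user": "bob"},     # login event
]

print(stats_values_by(events, "session"))
```

The design point is that grouping is a single pass over one combined stream, whereas a join needs two result sets held side by side, which is where the subsearch result limits mentioned earlier in this section start to bite.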

Other References

- Aplura (a Splunk partner) has also made their Best Practices document available: Aplura's Splunk Best Practices
